Implementing real-world optimisation use cases in state-of-the-art quantum devices

- Date

- Reading time

- 8 minute

- Author

- Ezequiel Ignacio Rodríguez Chiacchio

This project addresses the use of quantum technologies to tackle real-world optimisation problems. Our work explores the capabilities of today’s quantum computers and exemplifies a problem class whose implementation can be extended to find commercial applications across different areas.

Combinatorial optimisation problems arise in many different fields, including medicine, finance, engineering, biology, and a host of key industries. Optimal solutions are often difficult to obtain, and more so as the size of the problem grows. This makes it hard for industries to find good solutions to meet business demands, positioning ideal network locations, or optimising routing systems.

Quantum computing offers a new paradigm to tackle hard optimisation problems. This involves algorithms capable of attaining computational speedups when seeking optimal solutions, as well as novel heuristic approaches suited to today’s noisy quantum hardware. For the industrial success of quantum computing, it is vital to understand how to best leverage current devices to implement and solve problems from real use cases, allowing us to identify any relevant obstacles and design strategies to overcome them.

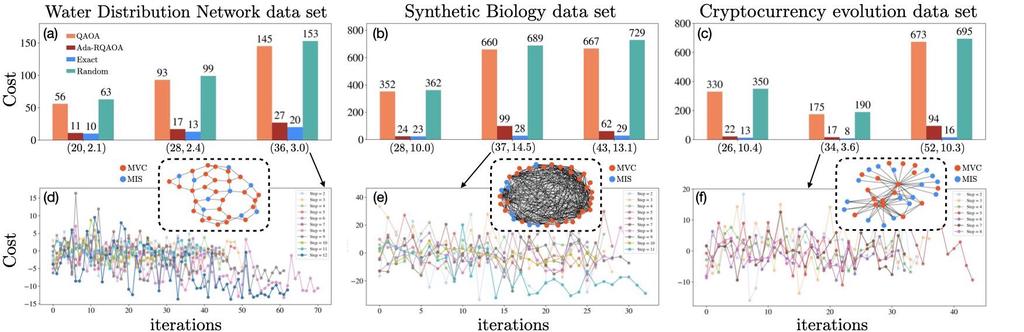

In this work, we partnered with Rigetti Computing to investigate the capabilities of quantum hardware to address a paradigmatic optimisation problem: finding the minimum vertex cover of a graph. To achieve this goal, we employed the Aspen-M-2 chip, a state-of-the-art quantum device developed by Rigetti Computing, and extracted problem instances from real data sets in the areas of biotechnology, finance and network systems.

Minimum Vertex Cover

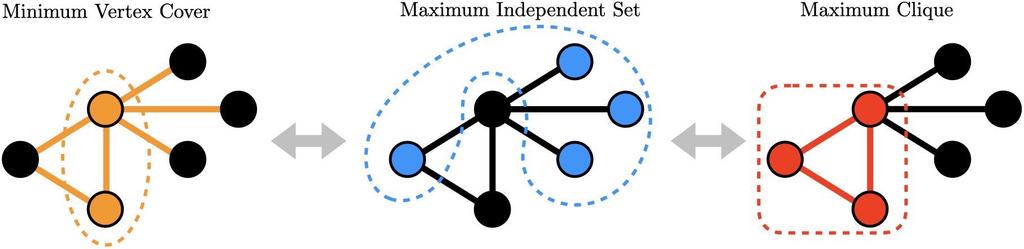

The Minimum Vertex Cover (MVC) of a graph is defined as the smallest set of nodes connected to every edge in the graph. Computing the MVC has been shown to be a generically NP-hard problem.

This task is also connected to two other commonly occurring graph optimisation problems: finding the Maximum Independent Set (MIS), the largest set of disconnected nodes in a graph; and finding the Maximum Clique (MC), the largest set of fully-connected nodes in a graph. In real-world situations, specific problems may arise as an instance of one of the three, but they can always be recast as an instance of another. Hence, one can equivalently speak of solving for the MVC, the MIS or the MC.

Real World Use Cases

We chose to focus on the MVC problem to explore commercial applications across a wide variety of fields. Our problem instances were sourced from three different areas in which the MVC, MIS and MC emerge naturally:

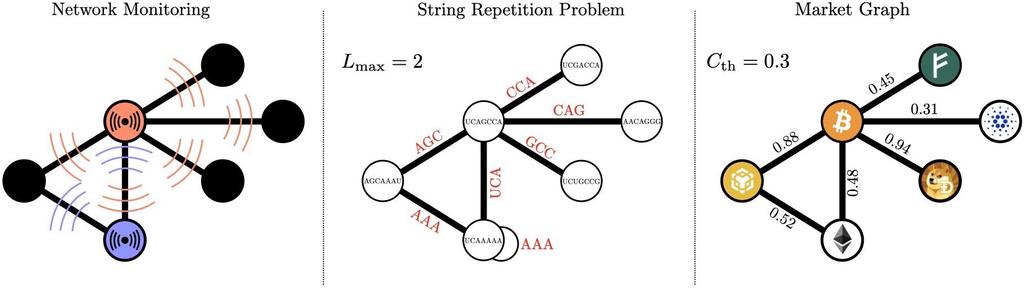

Water distribution networks: Given their physical structure, these systems are usually represented by graphs. A common problem that arises in this field is how to optimally distribute sensors to monitor the network. This translates into an MVC problem, where the nodes belonging to the MVC determine where the monitoring units should be placed.

Synthetic biology: In this field, genetic constructs are often designed and built by combining DNA sequences known to have particular functions. Generating synthetic DNA constructs that involve many parts with similar sequences can lead to cell stability problems. Therefore, one key challenge is to build libraries of DNA parts whose sequences are as diverse as possible. Given a set of DNA parts as strings, this problem can be formulated as finding a MIS, by mapping the strings into nodes of a graph and adding edges between them whenever the strings share more letters than a specified length.

Portfolio Allocation: One approach for designing a portfolio allocation strategy is to make use of market graphs. A market graph is constructed by mapping a set of stocks into the nodes of a graph and drawing edges between them whenever their correlation over a certain period of time is larger than a fixed threshold. A straightforward application of market graphs is to obtain the maximal number of correlated stocks, which maps onto finding the MC of the graph.

Problem Implementation

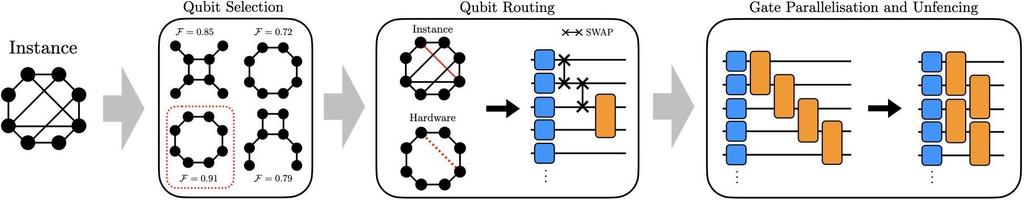

We implemented the problem instances on the hardware following three steps:

Qubit selection: From all possible sets of qubits that could host the problem instance, we kept the set with the highest average fidelity.

Qubit routing: Using SWAP operations, we generated the connections present in the instance but not on Aspen-M-2.

Gate parallelisation and unfencing: We applied the pulse-level control tools hosted by Rigetti’s software package, Quil-T, to enable simultaneous execution of the parallelised circuit gates.

Additionally, we included an error mitigation protocol (SPAM Twirling ) to remove spurious correlations between qubits during the preparation and measurement stages.

Finding the MVC using quantum computing

We employed two different algorithms to find the MVC: the Quantum Approximate Optimisation Algorithm (QAOA), the go-to algorithm for optimisation with noisy quantum computers; and its recursive version (RQAOA), which reduces the size of the problem recursively until it is small enough that it can be solved exactly. In particular, we developed an adaptive version of RQAOA (Ada-RQAOA) that enables a faster decrease of the problem size, while maintaining comparable levels of performance.

Our experiments revealed a notable superiority of Ada-RQAOA over QAOA, with the former getting very close to the optimal solutions in certain cases and the latter barely surpassing the performance of averaging over many random solutions. This predominance follows from Ada-RQAOA constructing a solution using only what is considered to be the most reliable information during multiple recursive runs, while QAOA makes an estimation solely from a single run.

Hardware Performance

The quality of the experiments is limited by the coherence times of the hardware. To maximise the performance of quantum computing, it is essential to minimise the depth of the circuit to operate within the permitted time scale.

We found three major factors that need to be addressed when implementing generic problem instances:

Qubit selection criteria: In a real device, each qubit has a different coherence time. We found that a poor choice of qubits can dramatically impact the performance. By selecting sets with the highest fidelity we obtained good results, but we expect other aspects, such as the role of specific qubits in the routing schedule, to be also of high importance.

Number of edges in the instance: Naturally, a large number of edges will result in a deeper circuit to implement. Accordingly, for larger/denser instances, we observed a decline in the performance of the algorithms. Based on our experiments, we estimate that reasonable performances can be obtained up to ∼150 edges.

Optimality of routing schedule: There are multiple ways of swapping qubits to satisfy the structure of the instance at hand. Doing so in a sub-optimal way inevitably results in longer circuit execution times. We thus performed an additional optimisation routine to minimise the number of SWAP operations required to build the schedule. Nevertheless, for certain instances, we only observed a clear optimisation process once Ada-RQAOA had reduced the size enough such that, even after routing, we were operating within the average coherence time.

Conclusion

This study explores the potential for quantum computing to play an increasing role in tackling hard optimisation problems. We illustrate key methodologies and challenges associated with executing QAOA and related algorithms at scale.

On the one hand, we have performed one of the first end-to-end explorations of RQAOA on quantum hardware. Ada-RQAOA, our adaptive modification of the algorithm, enabled us to explore instances with up to ~50 nodes. On the other hand, we identified three factors that have a strong impact on the device performance: the number of edges in the problem instance, the quality of the routing schedule, and the qubit selection criteria. Being able to handle denser instances for larger problems will become increasingly feasible as devices evolve towards longer coherence times and higher gate fidelities.

Our work constitutes an important step towards large-scale quantum computers addressing complex industrial problems. Now is an opportune time for organisations to collaborate with quantum developers and investigate how this technology can become a powerful tool in their problem-solving strategies.

A full technical report of our findings will be released as a publicly available article in the coming months.

Acknowledgements

We would like to thank our collaborators Mark Hodson and Eric Ostby from Rigetti Computing, as well as our team at Entropica Labs.